Yasmin Milligan and Cecilia Askvik unpack the privacy regulator’s AI guidance on the use of commercially available AI products and explore other initiatives in the regulation of AI in Australia. This insight highlights the key privacy considerations your business needs to navigate AI-related privacy risks, demonstrating how robust privacy practices and compliance can mitigate these risks and allow for safe and responsible AI innovation.

Read on for the latest in KWM’s inhouse-centred series: From our inhouse to yours.

The adoption of artificial intelligence (AI) is rapidly accelerating across society, with most businesses already integrating AI into their operations and seeking new opportunities for innovation. Unlike the European Union, which has enacted the EU AI Act, Australia does not currently have dedicated AI legislation and has so far taken a voluntary approach to AI regulation.

In 2019, Australia adopted eight voluntary AI Ethics Principles to encourage businesses to carefully consider the impacts of using AI. Since then, the Government has conducted public consultations on “Safe and Responsible AI in Australia”, acknowledging that Australia’s existing laws do not adequately address the risks presented by AI and that new regulatory approaches may be needed. In response, several voluntary standards and policies have been published to guide organisations on how to develop and deploy AI safely and reliably, and the Government is also considering introducing mandatory guardrails for AI in “high-risk settings”.

However, this does not mean that AI is currently unregulated in Australia; there are several guidance notes and policies organisations should consider when developing and using AI. Notably, the Office of the Australian Information Commissioner (OAIC) published guidance last year on how existing Australian privacy laws apply to the use and development of AI by businesses. This guidance clarifies the application of existing privacy laws to AI and outlines the privacy regulator’s expectations for businesses to ensure compliance with privacy regulations in the context of AI.

Inhouse counsel must be across the OAIC’s AI guidance to ensure the business complies with Australian privacy law and follows best privacy practice when using or developing AI.

A closer look at the two AI privacy guides

The OAIC has issued two sets of guidance: one for organisations that use commercially available AI products, and another for those developing and training generative AI models.

When do they apply?

The guides emphasise that the Privacy Act 1988 (Cth) (Privacy Act) applies to any use of AI involving personal information, and to any collection, use or disclosure of personal information to train and develop generative AI models.

Both guidance notes focus on generative AI, though the principles highlighted may still be relevant for other forms of AI. The guides make it clear that while generative AI models present certain privacy risks, other forms of AI can also present significant privacy risks and adverse impacts.

Organisations must bear in mind that the guidance notes only highlight the key privacy obligations under the Privacy Act and are not meant to be an exhaustive overview of all applicable privacy obligations.

Other relevant frameworks

This insight will not provide a detailed overview of the various voluntary AI principles, standards, and the proposed mandatory AI guardrails. However, our experts in the Technology space maintain a comprehensive map of AI regulation in Australia as well as a Tech Regulation Tracker and have published insights on both the Voluntary AI Safety Standard and proposed mandatory guardrails.

The Government released the Voluntary AI Safety Standard in September 2024, which outlines 10 voluntary guardrails and provides practical guidance for organisations to safely and responsibly use and innovate with AI. The standard clarifies that its purpose is to help organisations use and deploy AI systems within the bounds of existing Australian laws and regulatory guidance.

Similarly, while both OAIC guidance notes primarily focus on helping organisations comply with the Privacy Act, both guides clarify that they will also support organisations aiming to adhere to the Voluntary AI Safety Standard’s guardrails.

Privacy and the use of commercially available AI products

This insight will focus on the OAIC’s guidance for the use of commercially available AI products, although many of the underlying privacy principles are the same as the ones set out in the separate guidance for developing and training AI models.

Who does it apply to?

The OAIC’s guidance on privacy and the use of commercially available AI products applies to organisations that deploy AI systems which have been built with, or which collect, store or disclose, personal information, and that use the AI system to provide products or services, whether for internal or external purposes. It also applies to the use of publicly accessible AI tools.

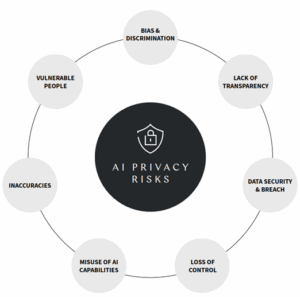

Data privacy risks in AI

The guidance highlights several significant privacy risks associated with the use of different forms of AI:

Navigating privacy rules in AI adoption

As discussed earlier, all uses of AI involving personal information must comply with the Privacy Act and the Australian Privacy Principles (APPs).

Importantly, the OAIC has stressed that, as a best privacy practice, organisations should not enter any personal or sensitive information into publicly available generative AI tools. This aligns with the third voluntary guardrail which emphasises the need to implement data governance, privacy and cyber security measures to manage and protect data when using AI.

The OAIC’s guidance note on the use of AI outlines several key privacy obligations your organisation must address when adopting and implementing AI:

Other relevant privacy principles that businesses should consider, but that are not discussed in detail in the guidance, include:

Ensuring trust: Due diligence for AI solutions

Before selecting an AI product, your business must conduct due diligence on the product, not only to ensure that it delivers fair and reasonable outcomes supporting your business objectives, but also to identify and address legal, ethical, privacy and operational risks before deploying AI. It is essential to ensure that any AI product your business intends to use is lawful, ethical and secure, and protects both individuals and organisations from harm.

This is also reflected in the eighth voluntary guardrail, which requires organisations across the AI supply chain to be transparent with one another about data, models and systems so that risks can be understood and managed.

AI due diligence will involve:

- Assessing intended use: Carefully evaluate the purposes for which the AI product will be used, including whether those uses involve high privacy risk activities and whether the product is appropriate. Consider whether the same purposes can be achieved without using AI.

- Conducting privacy impact assessments: As detailed above, privacy impact assessments (PIAs) should be undertaken to identify and address potential privacy risks associated with the use of the AI product.

- Reviewing security risks: Assess the security risks of using the AI product to ensure the AI system can be deployed safely, while also ensuring compliance with APP 11 and other relevant security obligations.

- Reviewing terms and settings: Review the terms and settings governing your organisation’s use of the relevant AI product. This includes determining whether the developer or any third parties will have access to data generated through your organisation’s use of the product.

Getting specific with AI data purposes

As noted earlier, APP 6, which governs the use and disclosure of personal information, is a key privacy principle for any business planning to adopt AI products.

The OAIC’s guidance on the use of AI clarifies that if an organisation inputs personal information into an AI system it will be:

- a use of personal information if the information stays within the organisation’s control, or

- a disclosure of personal information if the information is made accessible to others outside the organisation and the organisation releases effective control over the information.

If your organisation plans to use personal information in an AI system, you must carefully consider the primary purpose for which the information was collected and ensure that the intended use or disclosure in the AI system aligns with that purpose. Purposes must be clearly and narrowly framed; they cannot be described in broad terms simply to justify an AI-related use. For example, if due diligence reveals that data will be shared with developers or third parties, that disclosure must comply with APP 6.

One of the exceptions allowing personal information to be used or disclosed for a secondary purpose applies where the individual would reasonably expect that use or disclosure. For this exception to apply, individuals must have been properly informed at the time of collection (see more under ‘Transparency’ above). However, the OAIC has noted that, given the significant privacy risks and community concerns associated with AI, it will be difficult for organisations to justify secondary uses of personal information for AI as falling within individuals’ reasonable expectations. Instead, organisations should seek consent.

For sensitive information, the reasonable expectations exception is narrower: the secondary purpose must be directly related to the primary purpose. Where it is not, consent must be obtained.

Inhouse counsel are well positioned to help the business determine whether a proposed use or disclosure of personal information aligns with the primary purpose or qualifies as an authorised secondary purpose.

Enabling responsible AI use through privacy compliance

Comparing the privacy risks outlined above with the key privacy obligations identified by the OAIC in its guidance, it becomes clear that many obligations under the Privacy Act directly address the privacy risks and concerns associated with AI. By prioritising compliance with privacy requirements, following best privacy practice and adopting the voluntary guardrails, your organisation can significantly reduce privacy risks and use and innovate with AI safely and responsibly.

With the Voluntary AI Safety Standard already in place, and closely mirroring the proposed mandatory guardrails, it is essential for organisations intending to use or develop AI to stay across, and ensure compliance with, the OAIC’s two AI guides and the voluntary guardrails. Adhering to these should be a central part of building robust AI governance frameworks.

Further information

If you or someone in your organisation needs further guidance on this topic, please reach out to one of our experts in the Technology space: Cheng Lim, Sector Lead, Technology, Media, Entertainment and Telecommunications, Partner, Melbourne, whose contact details are here.

Check out other insights from the Office of General Counsel team here and subscribe to KWM Pulse using the button below to stay across upcoming insights in areas of interest.

If you want a particular topic covered by our From Our Inhouse To Yours Pulse series, please reach out to the Inhouse Counsel Series editor, Yasmin Milligan, via LinkedIn.