In recognition of Data Privacy Day, Cecilia Askvik considers the changes to the Privacy Act's transparency requirements for the use of automated decision making, which are set to take effect later this year, and how organisations can prepare for the new obligations.

Read on for the latest in KWM’s inhouse-centred series: From our inhouse to yours.

28 January marks Data Privacy Day, also known as Data Protection Day. Data Privacy Day is an annual event celebrated internationally to raise awareness of the right to data protection and privacy. It commemorates the signing of the Council of Europe’s Convention 108 in 1981, the first legally binding international treaty to protect privacy in the digital age.

In today’s rapidly changing digital landscape, Data Privacy Day serves as a timely reminder to organisations of their responsibility to safeguard privacy, particularly as emerging technologies such as artificial intelligence and automated decision making become more prevalent. As these technologies continue to evolve, it is essential for organisations to establish robust privacy practices that protect individuals’ right to privacy, including being transparent about how personal information is used in these technologies.

The privacy reforms passed in 2024 introduced new transparency rules for organisations’ use of automated decision making systems, which will commence on 10 December 2026. For inhouse counsel, it is essential to be across the new transparency requirements for the use of automated decision making and to actively support your organisation in taking a proactive approach to identifying automated decision making processes and preparing for compliance with the new requirements.

This is particularly timely, as the privacy regulator has announced it will start 2026 by reviewing selected businesses’ privacy policies in its first-ever privacy compliance sweep.

What is automated decision making?

An automated decision making (ADM) system is a computerised process used to assist or replace the judgment involved in human decision-making. ADM systems can range from simple rule-based formulas to complex systems powered by AI, with varying levels of sophistication and impact on individuals.

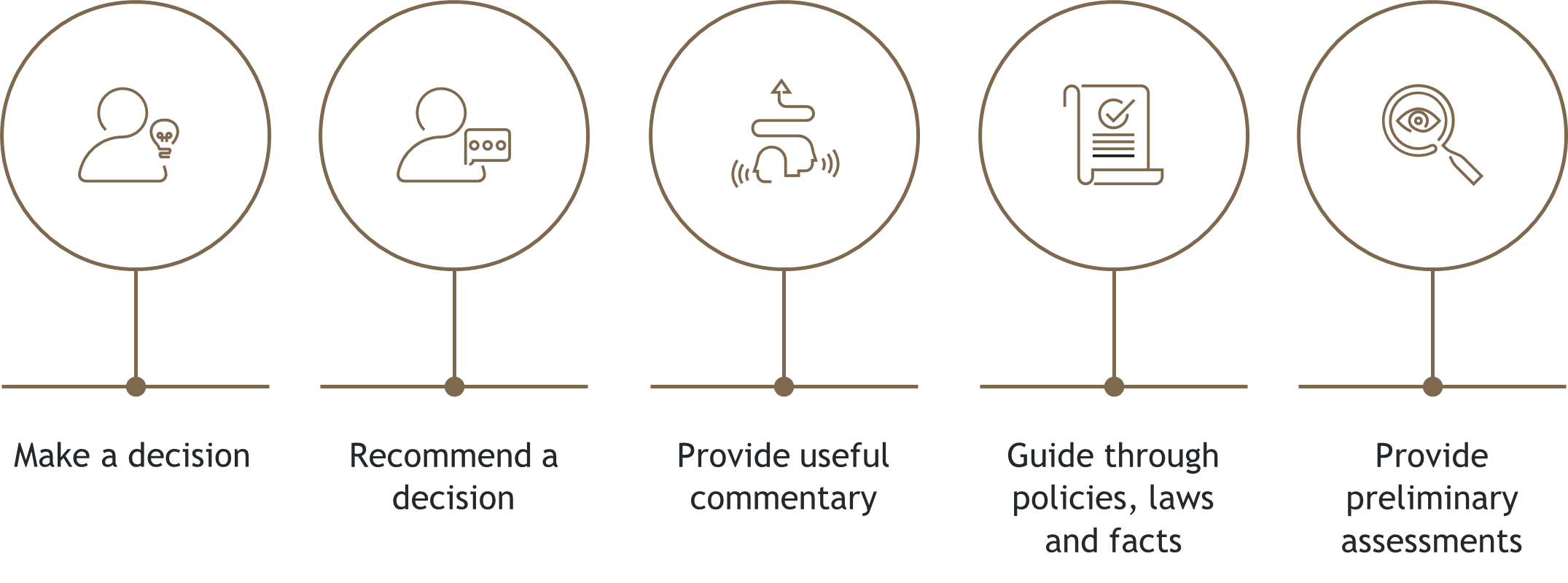

ADM can be used to:

Privacy implications of ADM systems

ADM systems often rely on the use of personal information, raising significant privacy concerns, particularly regarding the transparency and integrity of decisions made using ADM systems.

The use of historical data to train ADM systems can further amplify privacy risks and have significant impacts on individuals’ rights and interests, as historical data may reflect prejudices and the under-representation of minorities in training datasets. This may lead to unfair treatment and discrimination, for example by consistently making decisions that disadvantage women.

ADM systems also often require large amounts of data from a range of sources and may retain data for longer than necessary.

Current transparency obligations under the Privacy Act

Australian Privacy Principle 1 (APP 1) requires APP entities to manage personal information in an open and transparent way. This includes a requirement for APP entities to have a clearly expressed and up-to-date Privacy Policy setting out how personal information is managed. The Privacy Policy must be available free of charge and in an appropriate form.

The Privacy Policy must include certain information, such as the types of personal information collected, how it is collected and held, and for what purposes it is collected, used, and disclosed (APP 1.4).

APP 1 also requires an APP entity to implement practices, procedures and systems relating to its functions and activities to ensure it complies with the APPs. It is therefore recommended that APP entities have a program of proactive review and audit of the adequacy and currency of their privacy policies.

The new transparency requirements

The Privacy and Other Legislation Amendment Act 2024 (Cth) received Royal Assent on 10 December 2024 and implements the first tranche of reforms to the Privacy Act 1988 (Cth) (Privacy Act).

One of the key reforms passed is a new obligation for APP entities to ensure their privacy policies include information about the use of automated decision making (APP 1.7 – 1.9).

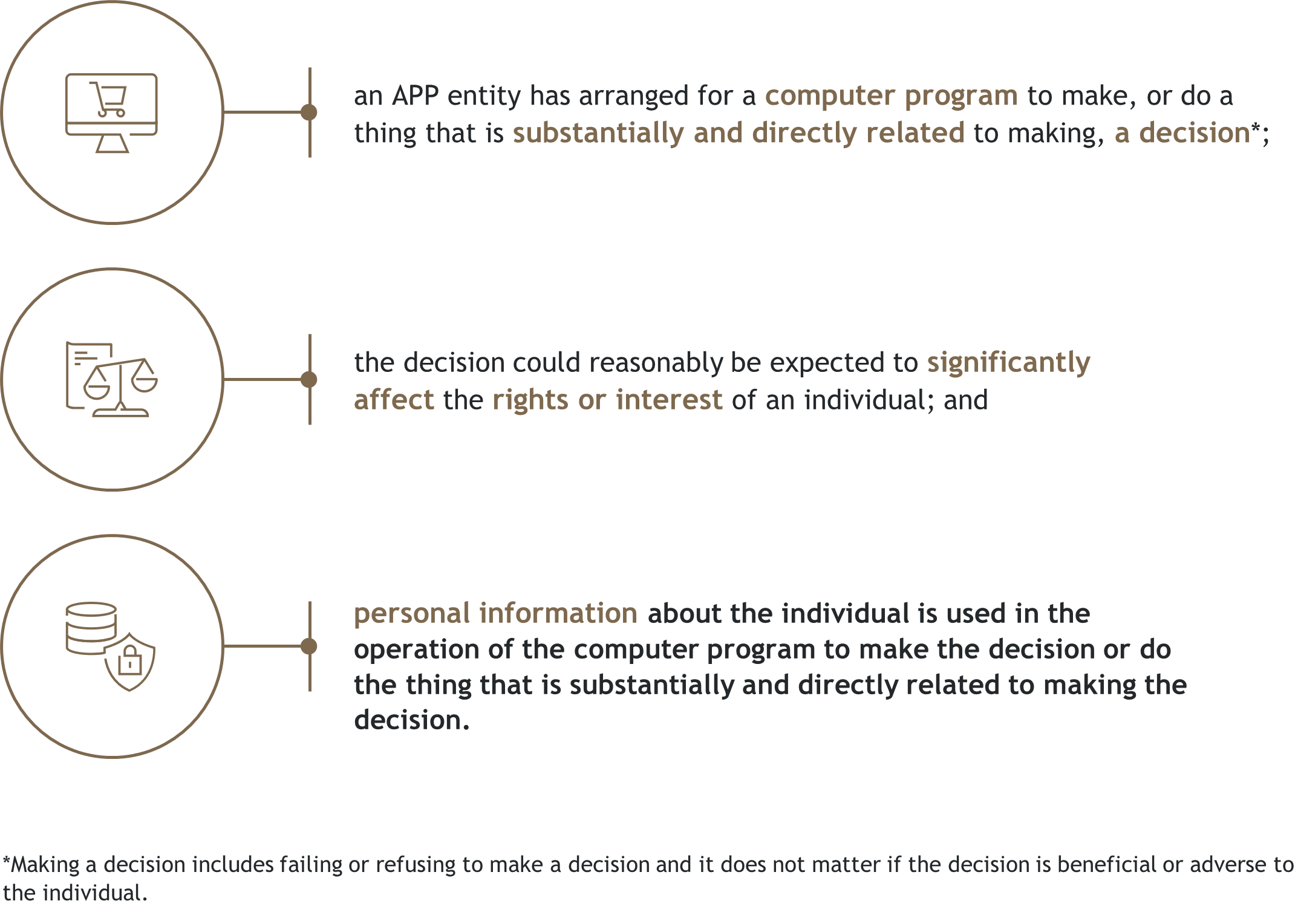

The new requirements apply where:

What decisions are covered?

Only decisions that are made entirely by a computer program (such as an AI or ADM system), or that are substantially made or influenced by a computer program, must be included in an entity’s Privacy Policy.

This means that the new transparency requirements apply even when there is a ‘human in the loop’: if the computer program has a substantial role in making or influencing the decision, it must be disclosed in the Privacy Policy.

Equally, the mere use of a computer program in a decision-making process does not automatically bring that process within scope.

When and where are ADM systems typically used?

Some sectors that increasingly use ADM systems to aid decision-making include healthcare, insurance, banking and finance, marketing and advertising, and taxation.

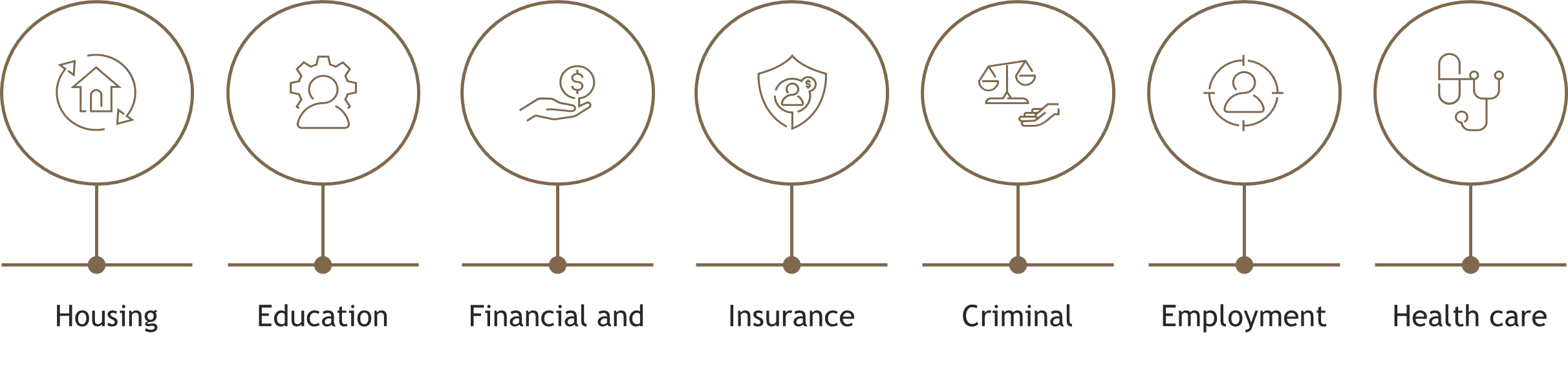

ADM systems could be used to make decisions that:

- Affect an individual’s access to health services.

- Deny an individual an employment opportunity.

- Affect an individual’s access to education.

Examples of decisions made by an ADM system that may affect the rights or interests of individuals include:

- Automatic refusal of an online credit application.

- Differential pricing resulting in prohibitively high prices that effectively bar someone from certain goods or services.

- Reducing a customer’s credit card limit based on analysis of other customers.

- An aptitude test used for recruitment that applies pre-programmed algorithms and criteria.

In its response to the Privacy Act Review Report, the Australian Government also highlighted examples of decisions that could have a legal or significant effect on individuals’ rights and interests. These include the denial of consequential services or support such as:

What information must be included in the Privacy Policy?

The Privacy Policy must include information about the following matters in relation to an organisation’s use of ADM (APP 1.8):

- the types of personal information used in the operation of the ADM system;

- the types of decisions made solely by the ADM system; and

- the types of decisions for which a thing, that is substantially and directly related to making the decision, is done by the operation of the ADM system.

However, the OAIC’s guidance on APP 1 notes that a privacy policy is not expected to contain every detail about an organisation’s practices, procedures and systems. Entities may choose the style and format in which they present their privacy policies, and are encouraged to use a layered approach to provide the required information where suitable.

Increased regulatory scrutiny: privacy policy reviews in 2026

Late last year, the OAIC announced its first-ever privacy compliance sweep, set to begin in the first week of January 2026. This initiative will involve a targeted review of selected businesses’ privacy policies to ensure compliance with the transparency obligations under the Privacy Act.

The sweep will target businesses that collect personal information in person, in sectors such as rental and property, car rental and car dealerships, and chemists and pharmacies.

In addition to the new transparency requirements, the 2024 privacy reforms granted the OAIC expanded regulatory powers. These include the power to issue infringement and compliance notices and to impose penalties of up to $66,000 for breaches of certain APPs, such as having a non-compliant privacy policy under APP 1.4.

The privacy compliance sweep is not the only recent action by the regulator in relation to transparency. On 21 January 2026, the Australian Information Commissioner published a report from a desktop review assessing how transparent Australian Government agencies are about their use of ADM. Although the desktop review concerned requirements for government agencies under the FOI Act, together with the planned privacy compliance sweep it demonstrates that transparency is a key regulatory focus for the OAIC in 2026.

Preparing for compliance

Organisations should undertake a comprehensive review of their systems and decision-making processes to identify which ones may be captured by the new transparency requirements.

This will involve the following steps:

The role of inhouse counsel

With their in-depth understanding of both privacy laws and the business, inhouse counsel should be involved at every stage of this process to ensure all systems involving automated decision making and the use of personal information are properly identified.

Inhouse counsel should also play a leading role in drafting or reviewing any amendments to the Privacy Policy. Their knowledge of the legal and regulatory requirements under APP 1 helps ensure that any amendments to the Privacy Policy address all matters required under APP 1.4 and APP 1.8. This includes ensuring compliance with APP 1.3, which requires privacy policies to be clearly expressed and up to date.

The OAIC and the Government have emphasised that privacy policies must be easy to understand and navigate, avoiding unnecessary jargon. Inhouse counsel are well positioned to translate complex legal obligations into clear, practical language. Their involvement is essential not only to ensuring legal compliance but also to fostering transparency and trust with individuals.

The OAIC has announced it will publish guidance on the new transparency requirements in 2026. This forthcoming guidance, along with any reports from the privacy compliance sweep referenced above, should assist organisations seeking to ensure transparency in their use of ADM systems and to prepare for the upcoming transparency requirements.

Further information

If you or someone in your organisation needs further guidance on this topic, please reach out to one of our experts in our Technology space, Cheng Lim, Sector Lead, Technology, Media, Entertainment and Telecommunications Partner, Melbourne.

Check out other insights from the Office of General Counsel team and subscribe to KWM Pulse to stay across upcoming articles in areas of interest.