Caroline Hayward and Kate Montano demystify GenAI and explain how to use GenAI for legal in-house business cases.

Everyone is talking about Artificial Intelligence, Large Language Models, Prompt Design, Generative AI and Responsible Use of AI. But how does it all fit together? How do you start to use it? Where will AI create efficiencies for in-house legal teams? We have been working through these questions ourselves over the past few months – now we share our key learnings with you, to help your Generative AI (GenAI) journey.

How does it all fit together?

Not all AI is created equal. AI models have been used in the legal industry for over a decade. eDiscovery, document review and document verification tools, proofreading products and contract comparison tools are all already part of modern legal practice – expediting routine and repetitive tasks that used to require large teams of paralegals and lawyers.

Now GenAI has joined the party. There are many types of GenAI. For our purposes, the relevant type is the Large Language Model (LLM), which is used to understand, summarise and generate text-based content. LLMs are trained on various data sets. The user instructs the LLM using prompts to generate the desired text-based content. Well-designed prompts lead the LLM to produce more relevant, accurate and useful content; the practice of crafting them is known as prompt design.

In short, LLMs are a text-based form of GenAI, which you can train on various sets of data and prompt to generate new content.

How do you start to use it?

Lawyers are risk-averse by nature. We have all heard about GenAI producing inaccurate content, hallucinations and biased content. These issues have resulted from a combination of factors, including the types of data the algorithms have been trained on, poor prompt design and application to inappropriate business cases. Data security and intellectual property concerns are also relevant – particularly for publicly available GenAI tools.

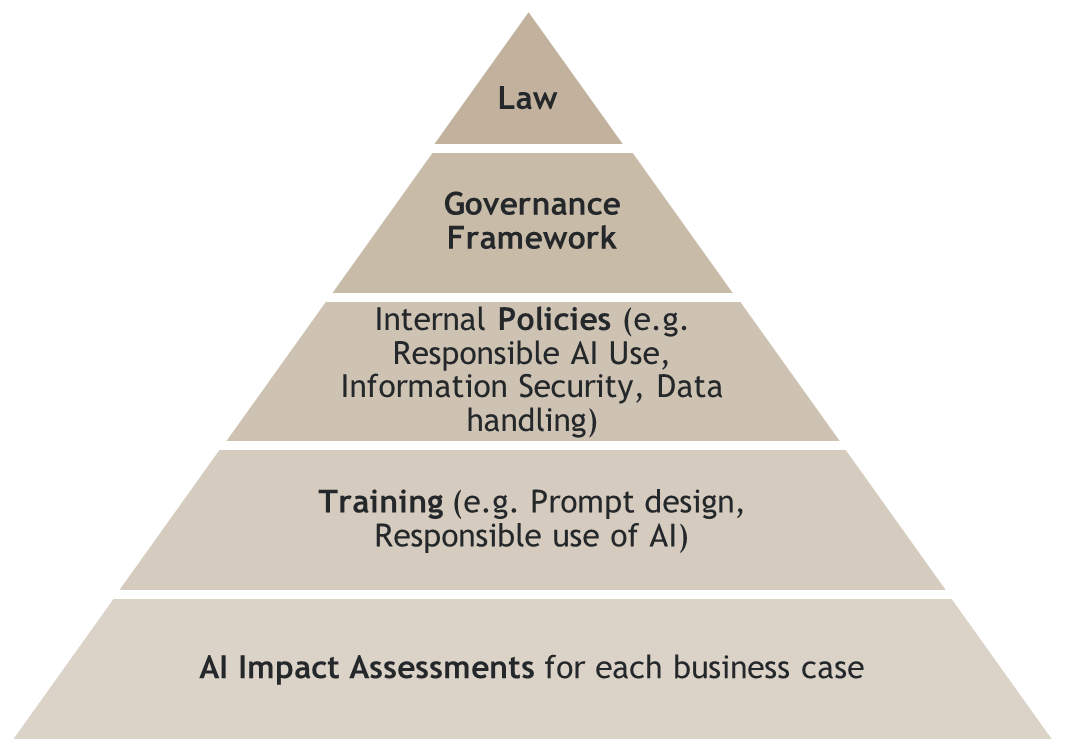

However, we can still realise the benefits of GenAI by putting in place appropriate governance principles and procedures around its use. For example, a Responsible AI Policy can set out how your organisation will use GenAI, any internal approval requirements and how you will handle data used or generated from GenAI in accordance with your internal policies. You could also appoint a Responsible AI Officer and arrange for oversight from your Risk function.

What legal requirements do you need to consider when formulating your governance principles and procedures? The Australian Government has released its AI Ethics Principles.[1] However, currently there is no AI-specific legal framework in place.[2] We still need to consider existing legislative requirements – including applicable privacy laws, such as the Privacy Act 1988 (Cth). Intellectual property issues should also be considered, including ownership of both training data and generated content under the Copyright Act 1968 (Cth). When you assess particular business cases, additional issues may arise. For example, the use of chatbots in consumer-facing contexts needs to be reviewed for any misleading and deceptive conduct issues under the Australian Consumer Law. AI Impact Assessments can be useful tools when considering each new business case.

You can also require that your organisation use disclaimers on content generated from GenAI, review content for accuracy and bias, and re-draft content to mitigate copyright issues. Some GenAI tools can be made available on an organisation’s own servers and trained on the organisation’s own data sets, considerably reducing data security concerns, mitigating some copyright issues and improving the quality of generated content.

Once established, responsible use of AI requires constant adaptation to changing circumstances and a commitment to taking reasonable steps to mitigate the harms of using AI (for example, obtaining appropriate IP and privacy consents to use data or offsetting energy consumption).

Where will AI create efficiencies for in-house legal teams?

The Australian Financial Review recently reported that one in two lawyers already use GenAI, with in-house lawyers adopting GenAI faster than law firms.[3] We were not surprised by this!

The most promising use cases for in-house legal teams include:

| Use case | Prompt example |
| --- | --- |
| Summarising detailed content clearly and concisely | Summarise the key recommendations of this government review [insert document] |
| Analysis of recent case law | Conduct an analysis of key trends in data privacy case law [insert copies of cases] |
| Clause comparisons | Compare the following ‘Clause A’ and ‘Clause B’ [insert each of Clause A and Clause B] |
| Simple legal drafting tasks | Pretend you are an Australian lawyer. Draft a sample sale contract for a [widget] manufacturing company. Use these documents as examples [insert precedent documents]. |
| Proofreading | Proofread this document to eliminate grammatical errors and enhance readability [insert document] |
| Idea generation and brainstorming | I am a legal project manager. I need to send a message updating my team about a deadline. Please draft a clear and concise message that communicates urgency without causing unnecessary stress. |
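For teams that want to reuse prompts like these consistently, the pattern of an optional role, an instruction and the inserted documents can be captured in a small template. The following is only a minimal sketch in Python: the function name `build_prompt` and its parameters are hypothetical, it does not call any GenAI tool, and any real use would need to follow your organisation's Responsible AI Policy.

```python
from typing import List, Optional


def build_prompt(instruction: str,
                 documents: List[str],
                 role: Optional[str] = None) -> str:
    """Assemble a prompt from an optional role, an instruction and source documents.

    Hypothetical helper for illustration only; it builds text and sends nothing.
    """
    parts = []
    if role:
        # Mirrors the "Pretend you are an Australian lawyer" pattern.
        parts.append(f"Pretend you are {role}.")
    parts.append(instruction.strip())
    # Label each inserted document so the model can refer to it by number.
    for number, text in enumerate(documents, start=1):
        parts.append(f"Document {number}:\n{text.strip()}")
    return "\n\n".join(parts)


# Example: the clause comparison use case, with placeholder clause text.
prompt = build_prompt(
    "Compare the following two clauses and list the differences.",
    ["Clause A: [insert Clause A]", "Clause B: [insert Clause B]"],
    role="an Australian lawyer",
)
```

Keeping the role, instruction and documents as separate inputs makes it easier to review and approve the fixed wording once, while the inserted documents change from matter to matter.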

But now the biggest question of all! Did we use GenAI to help us write this article? Yes, we proofread our draft using KWMChat – a KWM-hosted LLM used by the firm, developed in accordance with our own Responsible AI Policy.

If you need further guidance on how to use AI in your in-house team, please contact Kendra Fouracre, Senior Associate, at kendra.fouracre@au.kwm.com or Cheng Lim, Partner, at cheng.lim@au.kwm.com.
